Molly is a social worker in London working in children’s social care. She works as part of the “Front Door team”, fielding calls from concerned citizens about children's welfare. Molly acts as a first contact point; it is her role to determine whether the call warrants further investigation. This is no easy task.

Molly is working under intense time pressure. She is also working with incomplete information and is constrained by the limits of her brain’s processing power; she is making decisions under what behavioural psychologists call “conditions of uncertainty”.

How humans make decisions

In the 1940s and 1950s, Herbert Simon developed the concept of "bounded rationality" to explain how humans, like Molly, make decisions. As he wrote:

“It is impossible for the behaviour of a single, isolated individual to reach any high degree of rationality. The number of alternatives he must explore is so great, the information he would need to evaluate them so vast that even an approximation to objective rationality is hard to conceive.”

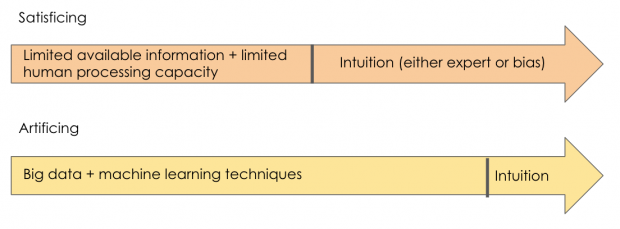

And so, people "satisfice". Someone like Molly reviews the available information, processes what she humanly can, and then relies on her intuition to find a satisfactory, but not necessarily optimal, solution.

Simon's ideas are closely aligned with the Naturalistic Decision Making (NDM) movement. This school of thought holds that, when humans satisfice, they generally apply expert intuition, which it regards as an acceptable, and indeed productive, feature of human decision making.

In contrast to this stands the Heuristics and Biases (HB) approach. According to this school of thought, humans tend to satisfice by relying on irrational beliefs and biases.

While these schools of thought appear to sit in tension with one another, they agree on some fundamental ideas:

- NDM acknowledges that irrational biases exist and should be avoided.

- HB recognises that expert intuition exists and should be embraced.

Kahneman and Klein (2009) describe the difference between the schools as follows:

“Members of the HB community… tend to focus on flaws in human cognitive performance. Members of the NDM community know that professionals often err, but they tend to stress the marvels of successful expert performance.”

Algorithmic decision tools

In recent years, algorithmic decision tools have been introduced to support the decision-making of public sector workers, like Molly, who are making decisions under conditions of uncertainty.

They have been introduced because many studies have demonstrated that actuarial models are more accurate than clinical judgment in predicting risk.

However, many algorithmic decision tools are designed with the intention that they be used in conjunction with the expert judgment of professionals. For example, the developers of the Allegheny Family Screening Tool write:

“Although experts in [Child Protective Services] risk assessment now generally agree that actuarial tools are more effective in predicting risk of child maltreatment than clinical judgment alone, these tools cannot and should not replace sound clinical judgment during the assessment process.”

Thus, even with the introduction of algorithmic tools, humans are being encouraged to apply expert intuition. I will call this "artificing": the form of satisficing that persists after the introduction of algorithmic decision tools.

The different ways algorithmic decision tools can be used

Since the introduction of algorithmic decision tools into the public sector, there has been considerable discussion about the potential risks caused by AI’s technical shortcomings, such as algorithmic bias.

However, far less attention is being paid to a different set of questions. Are the tools being used as they were designed to be used? How do humans feel about these tools, and how do these emotions shape human-machine interactions?

And to what extent should we expect algorithmic tools to displace the heuristics and biases that characterise human decision making?

I suspect that tools are often not being used precisely as intended, and I will be conducting field research over the summer to test this assumption.

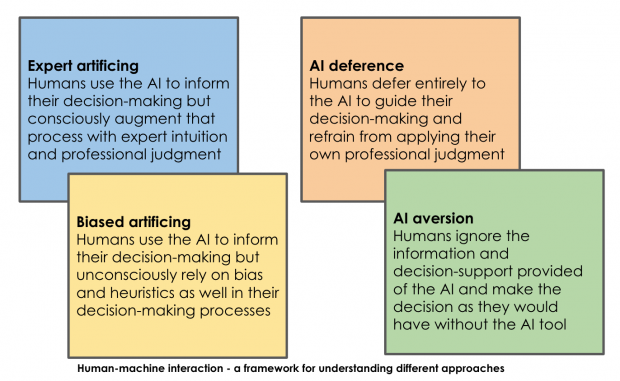

There appear to be four ways that Molly could be using the algorithmic tool to support her decision making: (1) she could be using the tool as intended, considering the algorithm's advice and augmenting it with expert intuition; (2) she could be relying on the tool's advice to guide her decision, but supplementing it with heuristics and biases; (3) she could be deferring to the tool entirely; or (4) she could be ignoring the tool. I will explore each in turn.

1. "Expert artificing"

Example: Molly receives a call about a child who has been absent from school for an entire week with no explanation. The decision tool suggests that the child is low risk, but Molly calls the child's mother and finds her wildly erratic on the phone. Reflecting on her 20 years working with families, Molly can think of only a few instances where a parent has seemed so unstable. She judges it highly likely that the mother is experiencing an acute-onset psychosis. Molly relies on her professional judgment and escalates the case against the advice of the algorithmic tool.

Molly is drawing on her expert intuition to recognise that a parent is likely experiencing acute psychosis. This is something a statistical tool would be highly unlikely to pick up, given the rarity of that kind of presentation. It is for precisely this reason that designers of algorithmic tools encourage "expert artificing": to ensure that the algorithm's limitations are kept in check by humans.

2. “Biased artificing”

Example: Molly receives a call about a child whose parents have been shouting. The caller, a neighbour, has heard doors slamming and children crying regularly for three days. The tool suggests that the child is low risk; however, that morning, Molly heard about a child who died after reports about shouting parents were deemed to require no further investigation. On that basis, Molly progresses the case for a face-to-face assessment.

Molly's decision is shaped by "availability bias" (sometimes loosely called recall bias): her assessment of the current case is strongly influenced by a vivid, recent recollection of a similar event.

While decision tools might reduce people's reliance on cognitive shortcuts, irrationality and bias will almost certainly continue to feature in their decision-making. Let's call this "biased artificing".

It is important that public sector organisations acknowledge that algorithmic decision tools are not a silver bullet which will eliminate all irrationality from human decision-making. Measures should therefore be implemented to minimise “biased artificing”.

Drawing people's attention to heuristics and biases, even through a simple guide, is a great start. Other simple ways to minimise bias include:

- Asking colleagues to take a devil’s advocate position.

- Using Edward de Bono's Six Thinking Hats approach to support consideration of alternative viewpoints.

3. “Algorithmic deference”

Example: Molly receives a call about a child who has been reported to have had bruising on her arms and legs for three weeks. The algorithmic decision tool gives the child a low risk rating. Despite her misgivings, Molly defers to the tool and assesses the child as requiring no further action.

Molly is demonstrating "automation bias": she favours the advice of the algorithmic tool over her own judgment that further investigation is warranted.

This is a problem because, for the reasons discussed above, algorithmic decision tools are generally designed to be used in conjunction with professional judgment.

Here, the solution lies in educating public sector workers about algorithmic tools and how to use them. Training should focus on two things: first, making the limitations of the tools explicit, to displace any sense of intimidation that public sector employees might feel; and second, stressing the importance of workers relying on both their professional expertise and the tool to guide decisions.

4. “Algorithmic aversion”

Example: Molly receives a call about an at-risk child and chooses to ignore the algorithmic decision tool. She trusts her professional judgment, gained over 15 years as a social worker, more than she trusts a computer.

There is evidence that decision tools are often not used as intended. In his book "Sources of Power", for example, Gary Klein describes the US military spending millions of dollars developing decision tools for commanders, only to abandon them because they weren't being used.

If public sector workers ignore algorithmic tools because they resent and distrust them, the tools will have no impact at all.

The solution here is to empower the people using the tools and give them a sense of control.

One way of achieving this is to include users of the tool in the design process. A good example is the Xantura tool, whose designers are working closely with social workers to understand how it can be most useful to them.

Another solution is to give users a sense of control over the algorithm itself. Research by Dietvorst, Simmons and Massey has shown that people are more likely to use algorithms if they can (even slightly) modify them.

How to move everyone towards expert artificing

Above, I’ve outlined different ways that algorithmic decision tools can be used.

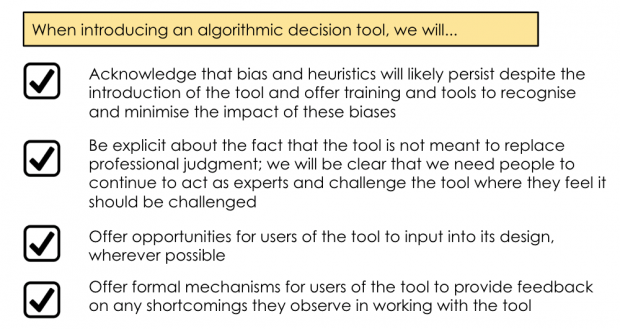

Public sector organisations should be supporting everyone to use the tool as it was designed to be used – what I have called “expert artificing”. This simple checklist should help:

We need to take a human-centred approach to introducing algorithmic decision tools into workplaces, thinking about how human minds work, how humans feel, and how humans and machines interact.

The checklist above offers some initial ideas; no doubt there are many more. Fundamentally, though, public sector organisations using algorithmic tools need to support their workers to artifice well because, even with the best possible tool, if it is not being used as intended, its utility and impact will be limited.

If you’re working with algorithmic tools and are interested in discussing this research further, you can reach me on thea.snow@nesta.org.uk.

Thea Snow

Senior Programme Manager, Government Innovation, Nesta
